Gradient Descent

Updated: December 1, 2020

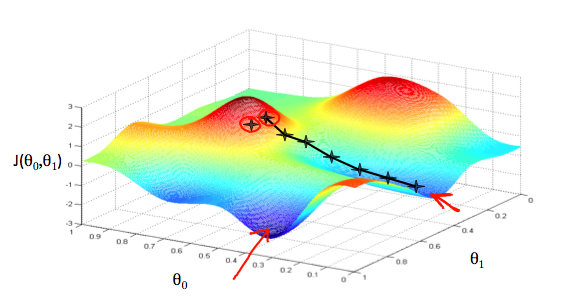

An optimization algorithm for finding a local minimum of a differentiable function.

(The red arrows point to the minima of the cost function $J(\Theta_0, \Theta_1)$.)

To find a minimum of the cost function, we compute its partial derivatives (the gradient) and “move along” the direction of steepest descent, i.e. opposite to the gradient. The size of each “step” is scaled by the coefficient α, which is called the learning rate.

$$\Theta_j^{\text{new}} := \Theta_j^{\text{old}} - \alpha \frac{\partial}{\partial \Theta_j} J(\Theta_0, \Theta_1)$$
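As a rough illustration of a single update, consider a hypothetical cost function that is not from the original figure, $J(\Theta_0, \Theta_1) = \Theta_0^2 + \Theta_1^2$, with an assumed learning rate α = 0.1 and an assumed starting point $(\Theta_0, \Theta_1) = (1, 2)$. The partial derivatives are $2\Theta_0$ and $2\Theta_1$, so one step gives:

$$
\begin{aligned}
\Theta_0^{\text{new}} &= 1 - 0.1 \cdot (2 \cdot 1) = 0.8 \\
\Theta_1^{\text{new}} &= 2 - 0.1 \cdot (2 \cdot 2) = 1.6
\end{aligned}
$$

The cost drops from $J(1, 2) = 5$ to $J(0.8, 1.6) = 3.2$, and both parameters have moved toward the minimum at $(0, 0)$.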

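Initially, each $\Theta_j$ is set to a randomly chosen value. At each iteration of the algorithm, we replace the “old” parameter $\Theta_j^{\text{old}}$ with the newly calculated value $\Theta_j^{\text{new}}$, updating all parameters simultaneously. This process repeats until the slope is zero (i.e. the partial derivatives of the cost function are zero), indicating that we have reached a minimum. In practice, we stop once the derivatives are sufficiently close to zero.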
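The iteration described above can be sketched in Python. This is a minimal sketch rather than a definitive implementation: the function name `gradient_descent`, the example cost $J(\Theta_0, \Theta_1) = (\Theta_0 - 3)^2 + (\Theta_1 + 1)^2$, the tolerance `tol`, the iteration cap `max_iters`, and the starting range are all assumptions made for illustration.

```python
import random
from typing import Callable, Tuple

# A gradient function returns (dJ/dtheta0, dJ/dtheta1) at a given point.
Gradient = Callable[[float, float], Tuple[float, float]]


def gradient_descent(
    grad: Gradient,
    theta0: float,
    theta1: float,
    alpha: float = 0.1,      # learning rate (assumed value)
    tol: float = 1e-8,       # "close enough to zero" threshold (assumed)
    max_iters: int = 10_000  # safety cap so the loop always ends (assumed)
) -> Tuple[float, float, int]:
    """Apply the update rule until both partial derivatives are near zero."""
    for step in range(max_iters):
        d0, d1 = grad(theta0, theta1)
        # Stop when the slope is approximately zero in every direction.
        if abs(d0) < tol and abs(d1) < tol:
            return theta0, theta1, step
        # Simultaneous update: both new values use the old parameters.
        theta0, theta1 = theta0 - alpha * d0, theta1 - alpha * d1
    return theta0, theta1, max_iters


def example_grad(theta0: float, theta1: float) -> Tuple[float, float]:
    # Gradient of the hypothetical cost J = (theta0 - 3)^2 + (theta1 + 1)^2,
    # whose only minimum is at (3, -1).
    return 2.0 * (theta0 - 3.0), 2.0 * (theta1 + 1.0)


# Randomly chosen starting point, seeded so the run is repeatable.
rng = random.Random(0)
start0, start1 = rng.uniform(-10, 10), rng.uniform(-10, 10)

theta0, theta1, steps = gradient_descent(example_grad, start0, start1)
print(f"theta0={theta0:.6f}, theta1={theta1:.6f}, steps={steps}")
```

The tolerance check stands in for "the slope is zero", since floating-point updates rarely land on an exact zero. The learning rate matters here as well: a value that is too small makes progress slow, while one that is too large can overshoot the minimum and fail to converge.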